The wiki page for convolution has a detailed description of convolution. In simple words, convolution is a mathematical operation that takes two functions, say f and g, and gives a third function, say v, as output. For discrete signals, each output sample is the sum of the products of one input with a flipped and shifted copy of the other, so it is not a simple point-to-point multiplication of the inputs.

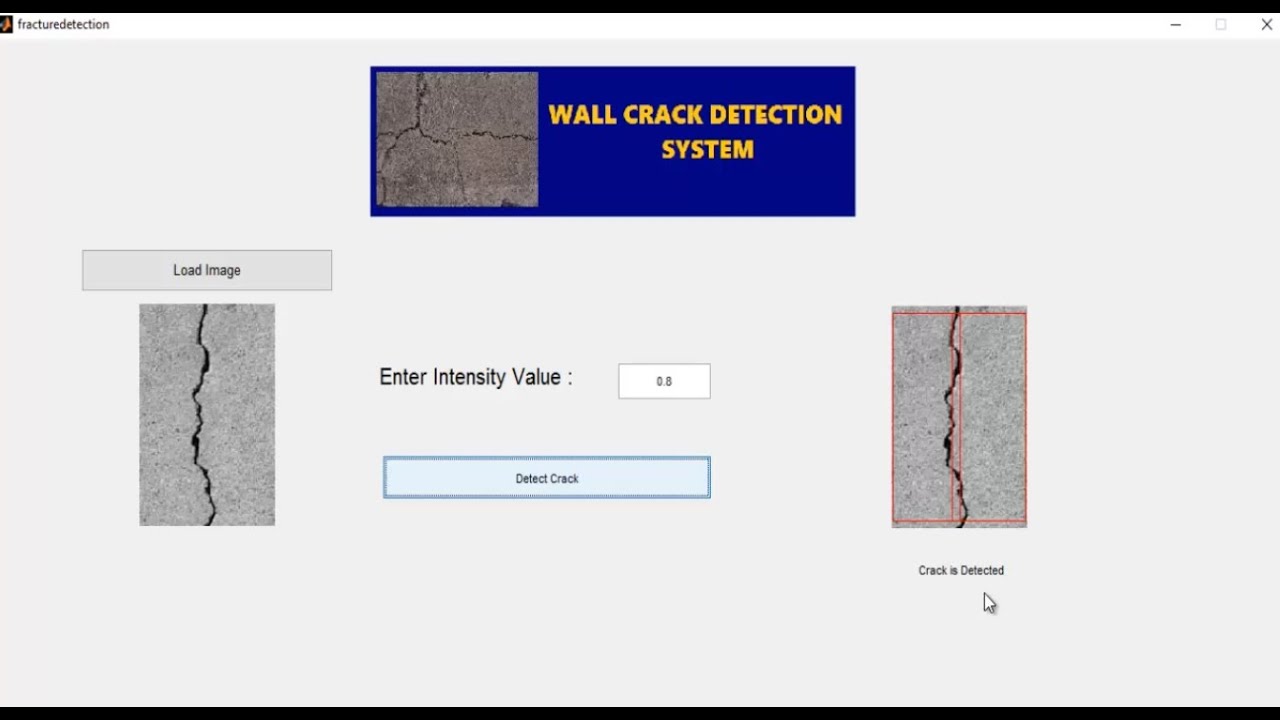

My aim is to develop the simplest MATLAB code for automatic detection of cracks and to estimate the length of the crack (and, if possible, other geometrical properties) from a sample image. The proposed algorithm in this work improves upon existing work by integrating crack detection with crack mapping using image segmentation and classification within a CNN architecture. In addition, the optimized CNN architecture proposed here uses a significantly lower number of filters in the convolution layer (256) leading to reduced.

Convolution is an important technique and is used in many simulation projects. It is especially important in image processing. So, today we are going to do convolution in MATLAB and check the output. You should also check Image Zooming with Bilinear Interpolation in MATLAB, in which we used the correlation technique, which is quite similar to convolution. It will give you a better idea of convolution, and I recommend reading about the difference between the two. Anyway, coming back to today's Convolution Calculator, let's start designing it:

Convolution Calculator in MATLAB

- The code for the convolution calculator is quite self-explanatory, but let me explain it a little.

- First of all, I ask the user for inputs, which are saved in variables named x and h.

- After that, I plot them using the stem function.

- In the next section, I use the default MATLAB command for convolution to calculate the convolution of x and h and save it in v.

- Next, I apply my simple algorithm to calculate the convolution of x and h, save it in Y, and plot it as well.

- Once you run the simulation and give your input functions, you will get the below results:

- You can see in the above figure that I have given two inputs, x and h, and the MATLAB Convolution Calculator calculated the convolution and gave us v and Y.

- v is calculated by the default MATLAB command, while Y is calculated by our small convolution algorithm.

- Their graph representation is shown in the below figure:

- You can see in the above figure that we have plotted all four signals, two inputs and two outputs, and both outputs are the same.

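The original listing is not reproduced on this page, so here is a minimal sketch that follows the steps described above. It is not the author's code: the input vectors are fixed example values (an assumption, in place of asking the user), and the loop is one straightforward way to compute a linear convolution by hand.

```matlab
% Minimal sketch of the calculator described above (not the original listing).
% Assumption: fixed example inputs instead of prompting the user.
x = [1 2 3 4];      % first input sequence
h = [1 1 1];        % second input sequence

% Convolution with the built-in MATLAB command
v = conv(x, h);

% Convolution with a simple hand-written algorithm:
% every product x(i)*h(j) is added to output sample i+j-1
m = length(x);
n = length(h);
Y = zeros(1, m + n - 1);
for i = 1:m
    for j = 1:n
        Y(i + j - 1) = Y(i + j - 1) + x(i) * h(j);
    end
end

% Plot the two inputs and the two outputs with stem
figure;
subplot(2, 2, 1); stem(x); title('x');
subplot(2, 2, 2); stem(h); title('h');
subplot(2, 2, 3); stem(v); title('v (conv)');
subplot(2, 2, 4); stem(Y); title('Y (manual)');

disp(v);
disp(Y);
```

For these example inputs both v and Y come out as 1 3 6 9 7 4, so the two outputs agree.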


Syed Zain Nasir

@syedzainnasir

I am Syed Zain Nasir, the founder of The Engineering Projects (TEP). I have been a programmer since 2009; before that I just searched for things and made small projects, and now I am sharing my knowledge through this platform. I also work as a freelancer and have done many projects related to programming and electrical circuitry.


Overview

In this exercise you will implement a convolutional neural network for digit classification. The architecture of the network will be a convolution and subsampling layer followed by a densely connected output layer which will feed into the softmax regression and cross entropy objective. You will use mean pooling for the subsampling layer. You will use the back-propagation algorithm to calculate the gradient with respect to the parameters of the model. Finally you will train the parameters of the network with stochastic gradient descent and momentum.

We have provided some MATLAB starter code. You should write your code at the places marked "YOUR CODE HERE" in the files. You have to complete the following files: cnnCost.m, minFuncSGD.m. The starter code in cnnTrain.m shows how these functions are used.

Dependencies

We strongly suggest that you complete the convolution and pooling, multilayer supervised neural network and softmax regression exercises prior to starting this one.

Step 0: Initialize Parameters and Load Data

In this step we initialize the parameters of the convolutional neural network. You will be using 10 filters of dimension 9x9, and a non-overlapping, contiguous 2x2 pooling region.

We also load the MNIST training data here.
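To make the layer sizes concrete, the following sketch works through the dimensions implied by this configuration. It assumes the standard 28x28 MNIST image size; the variable names and the 0.1 initialization scale are illustrative assumptions and need not match the starter code.

```matlab
% Layer sizes implied by the configuration above (sketch).
% Assumptions: 28x28 MNIST images; names and the 0.1 scale are illustrative.
imageDim   = 28;    % image height and width
numClasses = 10;    % digits 0-9
filterDim  = 9;     % 9x9 filters
numFilters = 10;    % number of filters
poolDim    = 2;     % 2x2 non-overlapping pooling region

convDim    = imageDim - filterDim + 1;   % 'valid' convolution output: 20
outputDim  = convDim / poolDim;          % pooled feature map side: 10
hiddenSize = outputDim^2 * numFilters;   % inputs to the dense layer: 1000

% Small random weights and zero biases
Wc = 0.1 * randn(filterDim, filterDim, numFilters);   % convolution filters
bc = zeros(numFilters, 1);                            % one bias per filter
Wd = 0.1 * randn(numClasses, hiddenSize);             % dense layer weights
bd = zeros(numClasses, 1);                            % dense layer bias
```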

Step 1: Implement CNN Objective

Implement the CNN cost and gradient computation in this step. Your network will have two layers. The first layer is a convolutional layer followed by mean pooling and the second layer is a densely connected layer into softmax regression. The cost of the network will be the standard cross entropy between the predicted probability distribution over 10 digit classes for each image and the ground truth distribution.

Step 1a: Forward Propagation

Convolve every image with every filter, then mean pool the responses. This should be similar to the implementation from the convolution and pooling exercise using MATLAB’s conv2 function. You will need to store the activations after the convolution but before the pooling for efficient back propagation later.

Following the convolutional layer, we unroll the subsampled filter responses into a 2D matrix with each column representing an image. Using the activationsPooled matrix, implement a standard softmax layer following the style of the softmax regression exercise.
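As a rough illustration of the forward pass, the sketch below handles a single image and a single filter at toy sizes. The sigmoid activation is an assumption (this section does not name the activation function), and the variable names other than activationsPooled are illustrative.

```matlab
% Forward pass for one image and one filter (sketch, toy sizes).
% Assumptions: sigmoid activation; names are illustrative.
image   = rand(8, 8);    % toy input image
W       = randn(3, 3);   % one filter
b       = 0;             % bias for that filter
poolDim = 2;

% conv2 flips its kernel, so rotate the filter by 180 degrees first
% to slide the filter over the image without flipping it.
convolved  = conv2(image, rot90(W, 2), 'valid');   % 6x6 response
activation = 1 ./ (1 + exp(-(convolved + b)));     % store for back propagation

% Mean pooling: average every poolDim x poolDim window, then keep
% only the non-overlapping windows.
averaged = conv2(activation, ones(poolDim) / poolDim^2, 'valid');   % 5x5
pooled   = averaged(1:poolDim:end, 1:poolDim:end);                  % 3x3

% Unroll the pooled response into a column (one column per image)
activationsPooled = pooled(:);
```

In the full network this is repeated for every image and every filter, and the unrolled columns are stacked so that each column of activationsPooled holds all pooled responses for one image.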

Step 1b: Calculate Cost

Generate the ground truth distribution using MATLAB’s sparse function from the labels given for each image. Using the ground truth distribution, calculate the cross entropy cost between that and the predicted distribution.
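The ground truth and cost computation can be sketched on a toy example as follows. The scores, labels, and variable names are made up for illustration, and averaging the cost over the number of images is an assumption about the scaling.

```matlab
% Softmax probabilities and cross entropy cost on toy data (sketch).
% Assumptions: made-up scores and labels; cost averaged over images.
numClasses = 3;
labels     = [1 3 2 3];                      % class index per image
numImages  = length(labels);
scores     = [2 0 1 0; 0 1 3 0; 1 2 0 4];    % numClasses x numImages

% Softmax over each column; subtracting the column maximum avoids overflow
scores = bsxfun(@minus, scores, max(scores, [], 1));
probs  = exp(scores);
probs  = bsxfun(@rdivide, probs, sum(probs, 1));

% Ground truth distribution: entry (labels(i), i) is 1, all others 0
groundTruth = full(sparse(labels, 1:numImages, 1, numClasses, numImages));

% Cross entropy between the ground truth and the predicted distribution
cost = -sum(sum(groundTruth .* log(probs))) / numImages;

% Predictions: the most probable class for each image
[~, preds] = max(probs, [], 1);
```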

Note that at the end of this section we have also provided code to return early after computing predictions from the probability vectors computed above. This will be useful at test time, when we wish to make predictions on each image without doing a full back propagation through the network, which can be rather costly.

Step 1c: Back Propagation

First compute the error for the output layer in the usual way. Then use the kron function to upsample the error and propagate it through the pooling layer.

You can upsample the error from an incoming layer to propagate through a mean-pooling layer quickly using MATLAB's kron function. This function takes the Kronecker tensor product of two matrices. For example, suppose the pooling region was 2x2 on a 4x4 image. This means that the incoming error to the pooling layer will be of dimension 2x2 (assuming non-overlapping and contiguous pooling regions). The error must be upsampled from 2x2 to 4x4. Since mean pooling is used, each error value contributes equally to the values in the region from which it came in the original 4x4 image. Let the incoming error to the pooling layer be given by a 2x2 matrix delta. If you use kron(delta, ones(2,2)), MATLAB will take the element by element product of each element in delta with ones(2,2), so that each element of delta fills one 2x2 block of the resulting 4x4 matrix. Because mean pooling averages over the region, the upsampled error is then divided by the size of the pooling region (4 in this example).

To propagate error through the convolutional layer, you simply need to multiply the incoming error by the derivative of the activation function as in the usual back propagation algorithm. Using these errors to compute the gradient w.r.t. each weight is a bit trickier since we have tied weights and thus many errors contribute to the gradient w.r.t. a single weight. We will discuss this in the next section.
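A small sketch of the upsampling step, using made-up values for the incoming error (the name delta follows the text; the other names are illustrative):

```matlab
% Upsampling the error through a 2x2 mean-pooling layer (sketch).
% Assumption: the values of delta are made up for illustration.
poolDim = 2;
delta   = [1 2; 3 4];    % incoming 2x2 error

% kron copies each element of delta into a poolDim x poolDim block;
% dividing by poolDim^2 spreads it equally over the pooled region.
upsampled = kron(delta, ones(poolDim)) / poolDim^2;   % 4x4

disp(upsampled);
```

Each 2x2 block of upsampled holds one element of delta divided by 4, so the total error is preserved: sum(upsampled(:)) equals sum(delta(:)).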

Step 1d: Gradient Calculation

Compute the gradient for the densely connected weights and bias, W_d and b_d following the equations presented in multilayer neural networks.

In order to compute the gradient with respect to each of the filters for a single training example (i.e. image) in the convolutional layer, you must first convolve the error term for that image-filter pair as computed in the previous step with the original training image. Again, use MATLAB’s conv2 function with the ‘valid’ option to handle borders correctly. Make sure to flip the error matrix for that image-filter pair prior to the convolution as discussed in the simple convolution exercise. The final gradient for a given filter is the sum over the convolution of all images with the error for that image-filter pair.

The gradient w.r.t. the bias term for each filter in the convolutional layer is simply the sum of all error terms corresponding to the given filter.

Make sure to scale your gradients by the inverse size of the training set if you included this scale in the cost calculation; otherwise your code will not pass the numerical gradient check.
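For a single image-filter pair, the filter and bias gradients described in this step can be sketched as below. The sizes and random values are toy assumptions and the names are illustrative.

```matlab
% Filter and bias gradient for one image-filter pair (sketch, toy sizes).
% Assumptions: random toy data; names are illustrative.
image     = rand(8, 8);   % one training image
deltaConv = rand(6, 6);   % error at the convolution output of a 3x3 filter

% Flip the error matrix, then convolve with the 'valid' option;
% the result has the same size as the filter.
gradW = conv2(image, rot90(deltaConv, 2), 'valid');   % 3x3

% Bias gradient: the sum of all error terms for this filter
gradb = sum(deltaConv(:));
```

To get the final gradient, accumulate gradW and gradb over all images, and divide by the number of images if the cost was averaged.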

Step 2: Gradient Check

Use the computeNumericalGradient function to check the cost and gradient of your convolutional network. We’ve provided a small sample set and toy network to run the numerical gradient check on.
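The idea behind a numerical gradient check can be sketched with central differences on a toy objective. This is not the provided computeNumericalGradient function: J and gradJ below are hypothetical stand-ins for the network cost and its analytic gradient.

```matlab
% Central-difference gradient check on a toy objective (sketch).
% Assumptions: J and gradJ are stand-ins for the CNN cost and gradient.
J     = @(theta) 0.5 * sum(theta .^ 2) + sum(sin(theta));
gradJ = @(theta) theta + cos(theta);

theta   = [0.3; -1.2; 2.0];
epsilon = 1e-4;
numGrad = zeros(size(theta));
for i = 1:numel(theta)
    e    = zeros(size(theta));
    e(i) = epsilon;                      % perturb one parameter at a time
    numGrad(i) = (J(theta + e) - J(theta - e)) / (2 * epsilon);
end

% Relative difference between the numerical and analytic gradients
relDiff = norm(numGrad - gradJ(theta)) / norm(numGrad + gradJ(theta));
disp(relDiff);
```

A very small relative difference suggests the analytic gradient is implemented correctly.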

Once your code passes the gradient check, you're ready to move on to training a real network on the full dataset. Make sure to switch the DEBUG boolean to false in order not to run the gradient check again.

Step 3: Learn Parameters

Using a batch method such as L-BFGS to train a convolutional network of this size even on MNIST, a relatively small dataset, can be computationally slow. A single iteration of calculating the cost and gradient for the full training set can take several minutes or more. Thus you will use stochastic gradient descent (SGD) to learn the parameters of the network.

You will use SGD with momentum as described in Stochastic Gradient Descent. Implement the velocity vector and parameter vector update in minFuncSGD.m.

In this implementation of SGD we use a relatively heuristic method of annealing the learning rate for better convergence as learning slows down. We simply halve the learning rate after each epoch. As mentioned in Stochastic Gradient Descent, we also randomly shuffle the data before each epoch, which tends to provide better convergence.
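The update rule, learning rate annealing, and shuffling can be sketched on a toy least-squares problem. This is not the starter code for minFuncSGD.m: the data, hyperparameter values, and variable names are all assumptions chosen for illustration.

```matlab
% SGD with momentum on a toy least-squares problem (sketch).
% Assumptions: toy data; all hyperparameter values and names are illustrative.
X     = [linspace(-1, 1, 100); ones(1, 100)];   % 2 x 100, one column per example
wTrue = [2; -1];
y     = wTrue' * X;                              % noise-free targets

theta     = zeros(2, 1);           % parameter vector
velocity  = zeros(size(theta));    % velocity vector
alpha     = 0.5;                   % initial learning rate
mom       = 0.5;                   % momentum
epochs    = 3;
minibatch = 10;
m         = size(X, 2);

for e = 1:epochs
    rp = randperm(m);              % randomly shuffle the data before each epoch
    for s = 1:minibatch:(m - minibatch + 1)
        idx  = rp(s:s + minibatch - 1);
        err  = theta' * X(:, idx) - y(idx);      % residuals on this minibatch
        grad = X(:, idx) * err' / minibatch;     % gradient of half the mean squared error
        velocity = mom * velocity + alpha * grad;
        theta    = theta - velocity;
    end
    alpha = alpha / 2;             % halve the learning rate after each epoch
end

disp(theta');
```

On this toy problem theta should end up close to wTrue, although the exact values vary from run to run because of the shuffling.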

Step 4: Test

With the convolutional network and SGD optimizer in hand, you are now ready to test the performance of the model. We’ve provided code at the end of cnnTrain.m to test the accuracy of your network's predictions on the MNIST test set.

Run the full function cnnTrain.m, which will learn the parameters of your convolutional neural network over 3 epochs of the data. This shouldn’t take more than 20 minutes. After 3 epochs, your network's accuracy on the MNIST test set should be above 96%.

Congratulations, you’ve successfully implemented a Convolutional Neural Network!